I think one of my best ideas was “vocable synthesis”, which my friend Lucy just reminded me of. I wrote a chapter on it in my doctoral thesis (chapter 3 – words), an ICMC paper in 2008 called Vocable Synthesis, and a later 2009 NIME paper called Words, Movement and Timbre. I got a kind of proof of concept working with a triangular waveguide mesh, but the results weren’t very musical. The ICMC paper has had zero citations (from people other than me); the NIME one got a bit more response, but not much. But I still think it is a really exciting idea and that someone could have a lot of fun with it.

Basically the idea is that vocal tracts are extremely complicated, and words are a lovely, compact way to describe sounds in terms of articulations/movements, based on our tacit knowledge.

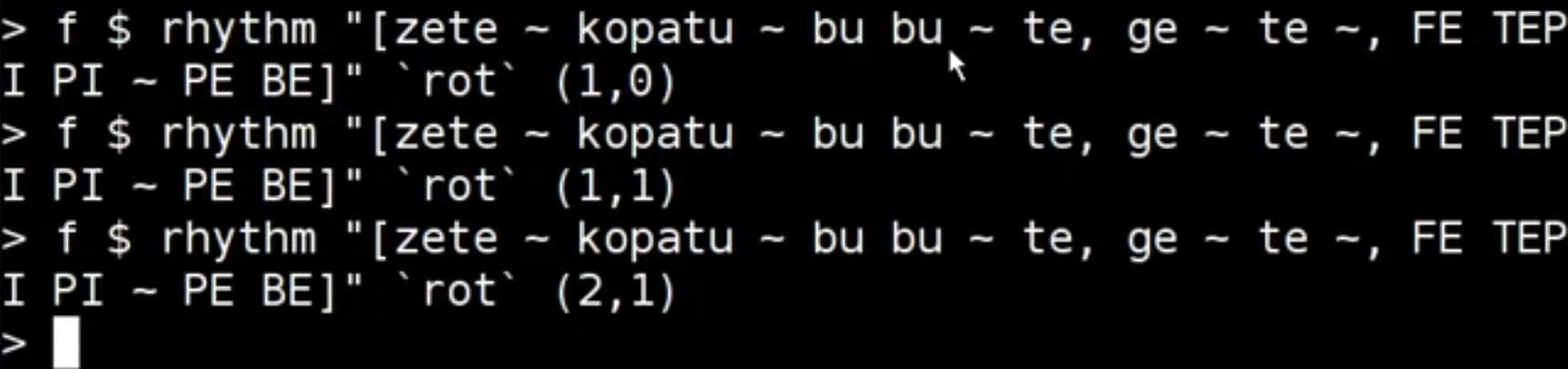

Drums (and other instruments) are less complicated than vocal tracts but could be controlled in the same way. The rich history of non-lexical vocable syllables in music traditions (bol syllables, canntaireachd etc) points to this being a rich seam for interaction design in music. For practices like live coding, the possibilities of describing sounds with words are exciting in a lot of ways.

Why did this idea go nowhere? Maybe people are just too used to minute control over sound in sequencers/DAWs, and the idea of describing sound in rich but vague ways doesn’t appeal? Indeed Canntaireachd is barely practiced now that sheet music has become dominant. I really have no interest in staff notation myself, but it has a lot of power, and seems to have taken over from oral practices like Canntaireachd in Europe. Maybe this means that people can’t see the possibilities of notation based on speech?

Or maybe the idea is just a bit too easily misunderstood for people to pick up and run with. It’s a bit like onomatopoeia, but instead of mapping from words to sounds via a vocal tract, it maps from words to sounds via articulations of a drum and drum stick. So the aim isn’t to make drum sounds that sound like speech at all, but to make drum sounds in a way that works analogously to text-to-speech. Maybe people say ‘ah, this is onomatopoeia, so like those projects making pianos sound like speech’. That isn’t the idea at all.
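As a rough illustration of that mapping, here is a minimal Python sketch. It is not the code from the thesis or the papers: the letter tables, the parameter names (`stiffness`, `position`, `tension`, `damping`) and all the values are invented for this example, as is the choice to let consonants describe the stick and vowels describe the drum skin. It only shows the shape of the idea, which is that a word becomes a sequence of articulations rather than a sequence of samples.

```python
from dataclasses import dataclass

# Hypothetical tables, invented for illustration only.
# Consonants describe the drum stick: how stiff it is and where it lands
# (0.0 = centre of the skin, 1.0 = edge).
CONSONANTS = {
    "k": {"stiffness": 0.9, "position": 0.1},
    "t": {"stiffness": 0.8, "position": 0.5},
    "d": {"stiffness": 0.5, "position": 0.5},
    "b": {"stiffness": 0.3, "position": 0.2},
    "m": {"stiffness": 0.1, "position": 0.3},
}

# Vowels describe the drum skin at the moment of the strike.
VOWELS = {
    "a": {"tension": 0.3, "damping": 0.2},
    "e": {"tension": 0.6, "damping": 0.3},
    "i": {"tension": 0.9, "damping": 0.4},
    "o": {"tension": 0.4, "damping": 0.1},
    "u": {"tension": 0.2, "damping": 0.5},
}


@dataclass
class Strike:
    """One articulation of stick against skin (hypothetical parameters)."""
    stiffness: float
    position: float
    tension: float
    damping: float


def parse_vocable(word):
    """Turn a vocable word into strikes, one per consonant-vowel pair.

    A consonant is held until the next vowel arrives; vowels with no
    preceding consonant, trailing consonants and unknown letters are ignored.
    """
    strikes = []
    pending = None
    for ch in word.lower():
        if ch in CONSONANTS:
            pending = CONSONANTS[ch]
        elif ch in VOWELS and pending is not None:
            strikes.append(Strike(**pending, **VOWELS[ch]))
            pending = None
    return strikes


if __name__ == "__main__":
    for strike in parse_vocable("taka"):
        print(strike)
```

Each `Strike` would then be handed to whatever physical model is making the sound, for example a waveguide mesh excited at `position` with its tension and damping set from the vowel. Nothing here tries to make the drum sound like the word; the word is just a compact description of the movements.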

Similar to the lack of interest in vocable synthesis, articulatory speech synthesis has not taken off beyond early research. It’s easier to do concatenative speech synthesis, and now ‘AI’-driven speech synthesis, rather than model the (again, extremely complex) vocal tract as a physical model. The lovely “pink trombone” software is a lot of fun to play with though, and people have used it in music performance, so maybe we aren’t far from a vocable synthesis breakthrough..

Or maybe it’s just a bad idea.

Here are some proof-of-concept videos I made at the time anyway, that maybe didn’t really prove the concept..

@yaxu do you know if anyone has done a "reverse pink trombone" (or related research), ie. something that maps vocal audio input to the model parameters? (could be used as control input, envelopes, etc) I solved pitch detection to my satisfaction once (low noise/error/latency), but couldn't figure out mapping vowels into vowel space (3d-ish). it seems hard and non-trivial ("vocal tracts are extremely complicated").

@sqx @yaxu@slab.org I think @danstowell has done this sort of thing, or might know some useful search terms at least..

@yaxu@post.lurk.org @sqx @yaxu@slab.org Yes, and in the deep learning era there's been a lot more – it's now often called "real-time audio style transfer" etc

Alex, I really like this concept and I’m really inspired by your other works and what you’ve accomplished. How can I get this working on my own machine? I searched through your blog a little and wasn’t able to find any resources, and some links don’t seem to be working now. Thanks!

Sorry, I don’t know where I’d begin to try to get any of this stuff working. The triangular mesh synth got added to SuperCollider’s sc3-plugins though as ‘membrane’, in case that’s useful!

If there are particular broken links you’d like me to resurrect I can have a go.