This is a big one for me – an upcoming collaboration with B C Manjunath and Matt Davies, exploring Carnatic…

Algoraves are here right now

I just happened on a pandemic-era article about algorave from 2022. It’s really nicely written and researched, and I especially…

Following instructions

I once went to a workshop run by the poet John Hegley. He got us making poetry booklets out of…

Tidal – a history in types

Some notes from the embedded conference “working out situated universality” hosted by Julian Rohrhuber at IMM Duesseldorf. If you’re reading…

Modulating Time

I’m just back from a productive week working with Mika Satomi and Lizzie Wilson on a mini project ‘Modulating Time’…

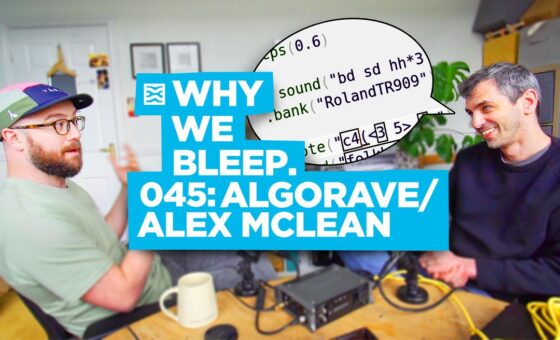

Why we bleep podcast

This was a fun chat with Mylar Melodies for his Why We Bleep podcast, about algorave, TidalCycles/Strudel, live coding and…

Algorithmic Pattern updates

I’ve been a bit behind on blogging in general, but made five posts in the Algorithmic Pattern blog yesterday. On…

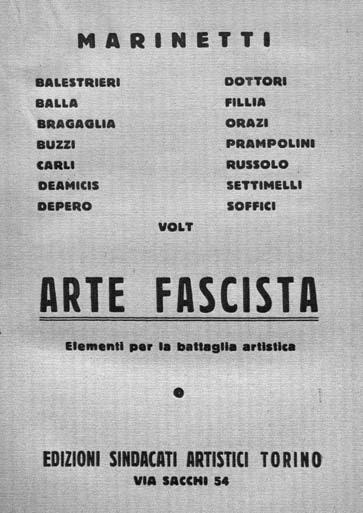

Luigi Russolo, Futurist and Fascist

I publish this blog post with some nervousness, as I’m not a historian or musicologist. This is something I feel…

Slow Growth of Mastodon

I used to use Twitter quite a bit, but now it’s run by a hard-right weirdo who wants to defeat…

My publications list – updated

My publications list was missing some entries and many of the PDFs. I started uploading everything to Zenodo, but although…